SGLang Day 0 Support for DeepSeek-V3.2 with Sparse Attention

by: The SGLang Team, Sep 29, 2025

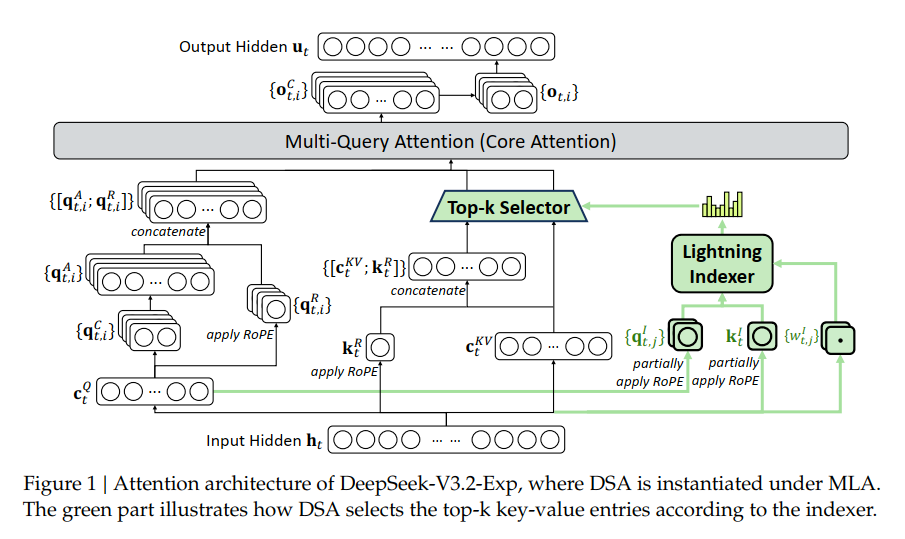

We are excited to announce that SGLang supports DeepSeek-V3.2 on Day 0! According to the DeepSeek tech report, DeepSeek-V3.2 is built by equipping DeepSeek-V3.1-Terminus with DeepSeek Sparse Attention (DSA) through continued training. DSA is a fine-grained sparse attention mechanism powered by a lightning indexer; with it, DeepSeek-V3.2 achieves significant efficiency improvements in both training and inference, especially in long-context scenarios. For more details about upcoming features, please check our Roadmap.

Installation and QuickStart

To get started on NVIDIA GPUs, pull the container and launch SGLang as follows:

docker pull lmsysorg/sglang:v0.5.3-cu129

python -m sglang.launch_server --model deepseek-ai/DeepSeek-V3.2-Exp --tp 8 --dp 8 --enable-dp-attention

For AMD (MI350X/MI355X):

docker pull lmsysorg/sglang:dsv32-rocm

To use TileLang for prefill and aiter for decode:

SGLANG_NSA_FUSE_TOPK=false SGLANG_NSA_KV_CACHE_STORE_FP8=false SGLANG_NSA_USE_REAL_INDEXER=true SGLANG_NSA_USE_TILELANG_PREFILL=True python -m sglang.launch_server --model-path deepseek-ai/DeepSeek-V3.2-Exp --disable-cuda-graph --tp 8 --mem-fraction-static 0.85 --page-size 64 --nsa-prefill "tilelang" --nsa-decode "aiter"

Alternatively, to use TileLang for both prefill and decode:

SGLANG_NSA_FUSE_TOPK=false SGLANG_NSA_KV_CACHE_STORE_FP8=false SGLANG_NSA_USE_REAL_INDEXER=true SGLANG_NSA_USE_TILELANG_PREFILL=True python -m sglang.launch_server --model-path deepseek-ai/DeepSeek-V3.2-Exp --disable-cuda-graph --tp 8 --mem-fraction-static 0.85 --page-size 64 --nsa-prefill "tilelang" --nsa-decode "tilelang"

For NPU, pull the image that matches your hardware.

NPU A2:

docker pull lmsysorg/sglang:dsv32-a2

NPU A3:

docker pull lmsysorg/sglang:dsv32-a3

Then launch the server:

python3 -m sglang.launch_server --model-path deepseek-ai/DeepSeek-V3.2-Exp --trust-remote-code --attention-backend ascend --mem-fraction-static 0.85 --chunked-prefill-size 32768 --disable-radix-cache --tp-size 16 --quantization w8a8_int8
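Once a server is running, you can query it from Python. The sketch below is a minimal client using only the standard library. It assumes the server listens on SGLang's default address (127.0.0.1, port 30000, since none of the commands above set `--host` or `--port`) and exposes the OpenAI-compatible `/v1/chat/completions` endpoint; the helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

# Assumptions: default SGLang host/port, OpenAI-compatible chat endpoint,
# and the model path from the launch command used as the model name.
BASE_URL = "http://127.0.0.1:30000"
MODEL = "deepseek-ai/DeepSeek-V3.2-Exp"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request for /v1/chat/completions."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.0,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request to a running server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=600) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

With the server up, `print(chat("Summarize DeepSeek Sparse Attention in one sentence."))` sends one request. Building the request separately from sending it keeps the payload easy to inspect or reuse with other HTTP clients.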

Description

DeepSeek Sparse Attention: Long-Context Efficiency Unlocked

At the heart of DeepSeek-V3.2 is DeepSeek Sparse Attention (DSA), a fine-grained sparse attention mechanism that redefines long-context efficiency.

Instead of performing quadratic full attention over all tokens, DSA introduces:

- Lightning Indexer (ultra-light FP8 scorer) to identify the most relevant tokens for each query.

- Top-k Token Selection to focus computation only on the most impactful key-value entries.

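This design reduces the complexity of core attention from O(L^2) to O(Lk), where L is the sequence length and k (much smaller than L) is the number of selected tokens per query. The indexer itself still scores every query-key pair, but at a far lower cost per pair than full attention. The result is dramatic improvements in both training and inference efficiency at context lengths up to 128K, with negligible loss of model quality.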
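To make the two steps concrete, here is a minimal pure-Python sketch for a single query. The scoring rule follows the lightning-indexer formula in the DeepSeek tech report as we understand it, I(t, s) = sum over indexer heads j of w(t, j) * ReLU(q(t, j) . k(s)). Everything else is a simplification: all function names are hypothetical, values are plain floats rather than FP8, and the attention step uses ordinary per-token keys and values instead of MLA's compressed latent cache. The `attended_pairs` helper counts query-key pairs to show where the O(L^2) to O(Lk) saving comes from; k = 2048 is used only as an illustrative value.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def indexer_scores(
    q_heads: Sequence[Vector],  # one small indexer query per indexer head j
    head_weights: Vector,       # per-head weight w(t, j) for this query
    keys: Sequence[Vector],     # one indexer key per preceding token s
) -> List[float]:
    """Score every preceding token: sum_j w_j * ReLU(q_j . k_s)."""
    return [
        sum(w * max(0.0, dot(q, k)) for q, w in zip(q_heads, head_weights))
        for k in keys
    ]


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Positions of the k highest-scoring tokens (all of them if fewer than k)."""
    order = sorted(range(len(scores)), key=lambda s: scores[s], reverse=True)
    return sorted(order[:k])


def sparse_attention(
    query: Vector,
    keys: Sequence[Vector],
    values: Sequence[Vector],
    selected: Sequence[int],
) -> List[float]:
    """Softmax attention over the selected KV entries only: O(k) work per query."""
    scale = 1.0 / math.sqrt(len(query))
    logits = [dot(query, keys[s]) * scale for s in selected]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(x - m) for x in logits]
    total = sum(weights)
    dim = len(values[0])
    return [
        sum(w * values[s][d] for w, s in zip(weights, selected)) / total
        for d in range(dim)
    ]


def attended_pairs(seq_len: int, k: int) -> Tuple[int, int]:
    """(dense, sparse) query-key pair counts, ignoring causal masking."""
    return seq_len * seq_len, seq_len * min(k, seq_len)
```

For a 128K-token sequence, `attended_pairs(131072, 2048)` gives 17,179,869,184 dense pairs versus 268,435,456 sparse ones, a 64x reduction in core-attention work (before accounting for the indexer's own lightweight pass).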

To support this breakthrough, SGLang implements and integrates:

- Lightning Indexer Support: a dedicated key & key_scale cache in the memory pool for ultra-fast token scoring.

- Native Sparse Attention (NSA) Backend: a new backend purpose-built for sparse workloads, featuring:

  - FlashMLA (DeepSeek's optimized multi-head latent attention kernel)

  - FlashAttention-3 Sparse (adapted for compatibility and maximum kernel reuse)

- Additional work: supporting different page sizes within one attention backend:

  - The indexer key & key_scale cache requires page size = 64, as dictated by the kernels DeepSeek provides.

  - The token-level sparse forward operator requires page size = 1.

Together, these components let SGLang serve DeepSeek-V3.2-Exp with GPU-optimized sparse attention and dynamic cache management, cutting memory overhead while scaling to 128K contexts.

The result is a runtime that preserves state-of-the-art reasoning quality while dramatically lowering inference costs, making long-context LLM deployment not only possible but also practical at scale.

Future Work

Future work will be tracked here. More specifically, we plan to:

- Multi-token prediction (MTP) support coming soon: MTP will speed up decoding, especially at small batch sizes.

- FP8 KV Cache: Compared to a traditional BF16 KV cache, this will nearly double the number of tokens the KV cache can hold and halve the memory access pressure on attention kernels, making it possible to serve longer or more requests faster.

- TileLang support: TileLang kernels are useful for flexible development.
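The "nearly double" estimate for the FP8 KV cache can be checked with some back-of-the-envelope arithmetic. The sketch below assumes the DeepSeek-V3-family MLA dimensions from the public model config (a 512-dim compressed latent plus a 64-dim RoPE key cached per token per layer, 61 layers). The FP8 layout is a hypothetical one: the 512-dim latent stored as 1-byte FP8 values with one float32 scale per 128 elements, and the RoPE part kept in BF16. It also ignores the separate indexer cache. Keeping scales and RoPE at higher precision is why the gain lands slightly below 2x.

```python
# Assumed DeepSeek-V3-family MLA dimensions (public model config).
KV_LORA_RANK = 512      # compressed latent cached per token per layer
QK_ROPE_HEAD_DIM = 64   # decoupled RoPE key cached per token per layer
NUM_LAYERS = 61


def bf16_bytes_per_token_layer() -> int:
    """Every cached element stored as 2-byte BF16."""
    return (KV_LORA_RANK + QK_ROPE_HEAD_DIM) * 2


def fp8_bytes_per_token_layer(tile: int = 128) -> int:
    """Hypothetical FP8 layout: FP8 latent + float32 scale per tile + BF16 RoPE."""
    latent = KV_LORA_RANK * 1             # 1 byte per FP8 element
    scales = (KV_LORA_RANK // tile) * 4   # one float32 scale per tile
    rope = QK_ROPE_HEAD_DIM * 2           # RoPE part kept in BF16
    return latent + scales + rope


def tokens_that_fit(budget_bytes: int, bytes_per_token_layer: int,
                    num_layers: int = NUM_LAYERS) -> int:
    """How many tokens of MLA KV cache fit in a memory budget."""
    return budget_bytes // (bytes_per_token_layer * num_layers)
```

Under these assumptions a token costs 1152 bytes per layer in BF16 versus 656 bytes in FP8, roughly 1.76x more tokens for the same memory. Since attention decode is largely memory-bound, the bytes read per token shrink by the same factor.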

Acknowledgments

We sincerely thank the DeepSeek team for their outstanding contributions to open model research, which have greatly benefited the open-source community, as well as for their highly efficient kernels that are now integrated into the SGLang inference engine.

From the SGLang community, we thank Tom Chen, Ziyi Xu, Liangsheng Yin, Biao He, Baizhou Zhang, Henry Xiao, Hubert Lu, Wun-guo Huang, Zhengda Qin and Fan Yin for their contributions to DeepSeek-V3.2-Exp support.

We also thank NVIDIA, AMD, and Nebius Cloud for sponsoring the GPU machines used in the development of this work.